Consultation Activity with JISC Experts

JISC Pedagogy Experts' Group Meeting, Aston University, 26th October 2006

Background

Our aim in this exercise was to validate i) the structure and ii) the vocabulary embedded in the Phoebe tool.

Previous work (in the LD Tools project) showed us that teachers take a multiplicity of paths through the planning process; therefore, we wanted to ensure that the paths created by links and tags within Phoebe map to real-world processes. In addition, different teachers across (and even within) sectors have differing vocabularies for talking about the components of the planning/learning design. This makes it very difficult for a general-purpose tool to map naturally and instantly to users’ thought processes. At best, it may oblige them to assimilate a new vocabulary; at worst, it will deter them from using the tool at all. The problem is greatest at the very level that the planning tool addresses: namely, the individual “chunk” of learning for which the teacher is creating a plan. Within FE and ACL this “chunk” can be referred to as a lesson or learning session; within HE it tends to be named according to format, viz. lecture, seminar, tutorial, lab class, practical.

Key questions

- What components do teachers take into consideration when planning a lesson in a technology-rich environment?

- How do teachers identify these components? Do they recognise a given set of terms, or do they have alternative individual vocabularies?

- How do teachers move among these components when planning a particular lesson, and how do they characterise the relationships between components and/or clusters of components?

Method

The activity was carried out during a meeting of the JISC Pedagogy Experts’ Group on 26th October, 2006, within a period of 1¼ hours. It was designed to be carried out in groups of three, with one person describing their approach to planning a lesson (either in general, or a specific lesson) to the others, who would record the description both verbally, by writing down answers to a set of pre-defined questions, and graphically, by laying down “cue cards” on a sheet of flipchart paper and using felt pen to indicate the relationships among them (flow, influence, etc.).

For detailed information about the method, see the activity instruction sheet and the set of structured questions given to participants.

The “cue cards” were derived from the components of a lesson plan identified in the Learning Design Tools project and the Practitioner-Informant interviews of the Phoebe project. We narrowed the number of components down to 22 using the following criteria:

- How commonly they appeared to be used (on the basis of data already captured).

- Whether we had reason to suspect that they might be problematic. For example, “Teaching approach” presupposes some form of engagement with theory, yet the extent to which teachers explicitly consider theoretical issues when planning is not clear from our previous findings.

- Doubt over the actual label used: i.e. is a particular term generally understood and/or used? If not, what is a preferable term?

- Uncertainty regarding the sector(s) to which the component is relevant (e.g. is differentiation a consideration in HE planning?)

- Need for more detailed specification of sub-components: e.g. we collected a number of items under the heading “Learner characteristics”, but needed to know if we had omitted any.

The 22 cards used were:

- Learning styles
- Differentiation
- Accessibility
- Aims and objectives
- Learning outcomes
- Resources / Tools
- Assessment criteria
- Teaching approach
- Learning activities
- Extension / Reinforcement / Remedial activities
- Assessment activities
- Preparation by students (e.g. advance reading)
- Reflective activities
- Location
- Prerequisites
- Individual work / group work
- Contingency plan
- Other staff involved (teaching, support etc.)
- Timings for activities (start/end; duration)
- Post-assessment feedback to students
- Content
- Curriculum

Results

Demographic data

Approximately 48 people participated in the activity. Since we received descriptions of 12 processes, they appear to have worked in groups of four rather than the groups of three envisaged. The processes came from practitioners in the following sectors:

| Sector | No. of processes |
|---|---|
| HE | 9 |
| FE | 1 |
| HE + FE | 1 |
| WBL | 1 |

Processes covered a range of subject areas, including art, economics, study skills, health, computer graphics, and problem-solving for maths and physics students. However, we were not interested in differences in how learning sessions are planned in different subject areas.

All were describing their own approach to planning. Eight people were planning at the level of an individual lesson (i.e. as requested), two were planning whole courses or modules, and the level at which the final two were planning was unclear. However, the processes they described appeared to be scalable to the lesson level. We have included all 12 processes in our analysis, since valuable information was collected even from the course-level planning.

Analysis of diagrams

Cue card usage

In analysing how the planners had used the cue cards, we were interested in the following:

- Which cards were used verbatim

- Which cards were not used

- Which cards were used but amended, and what we could learn from the amendments

- Whether any additional cards were created and, if so, whether they could be mapped to existing Phoebe components, and whether any components needed to be added or existing ones elaborated.

The following table shows how the cards were used:

| Component | No. of participants using it verbatim or with amendments | Additional material supplied by participants | Considerations for the future design of Phoebe |
|---|---|---|---|
| **Pre-defined on cue cards:** | | | |
| Learning activities | 12 | | |
| Learning outcomes | 11 | | |
| Content | 10 | | |
| Other staff involved (teaching, support etc.) | 10 | Consider staffing resources; include in-company mentors (WBL) | Need greater awareness of WBL in Phoebe |
| Resources/Tools | 10 | | |
| Aims and objectives | 9 | | |
| Assessment criteria | 9 | Link to learner profiles | |
| Reflective activities | 9* | | |
| Teaching approach | 9 | | |
| Accessibility | 8 | | |
| Assessment activities | 8* | | |
| Individual work/group work | 8 | | |
| Learning styles | 8* | | |
| Contingency plan | 7 | | |
| Curriculum | 7 | | |
| Location | 7 | | |
| Preparation by students (e.g. advance reading) | 7 | | |
| Timings for activities (start/end; duration) | 7* | | |
| Differentiation | 6 | Scope activities according to learners/groups | Already supported, but check |
| Extension/Reinforcement/Remedial activities | 5 | | |
| Prerequisites | 5 | Learners’ knowledge within the context of the previous & next stages in students’ learning | Include in learner characteristics? |
| Post-assessment feedback to students | 4 | | |
| **Components added by participants:** | | | |
| Scheme of work/module overview | 2 | | Investigate whether Phoebe, as it stands, could be used for planning at the SoW level. Should be possible, but "activities" would have to correspond to individual sessions. |
| Design team discussions | 2 | | The concept of Phoebe as a community artefact and its implementation in wiki technology support these implicitly. |
| Evaluation & reflection | 2 | | Already supported |
| Method of delivery + no. of students | 1 | | Already supported |
| Apprenticeship model | 1 | | Already supported in models of learning |
| Work-based learning | 1 | | Need more awareness of WBL in Phoebe: include in models of learning? |
| Informal learning | 1 | | Not clear from the diagram what this relates to, but perhaps remind teachers (in the learner characteristics/transferable skills segments) of the role of informally acquired knowledge in shaping the learning experience (draw on a common fund of knowledge in explanations/discussions?) |
| Follow-on activity | 1 | | Build into advice/prompts on activity sequences? |

\* Includes explicit mention of this component on a different card.

A total of 19 additional cards were created, 17 of which could be mapped either to the pre-defined cards or to other components which were already in Phoebe but had not been included in the pre-defined set (for the reasons explained above). As the table above shows, the components of Phoebe would have covered most of the planners’ requirements in the activity. We do not consider it advisable to omit the least-used components, since a) participants were not evenly spread across the sectors and b) these components are known to be important from our previous work.

Structure and flow in the planning process

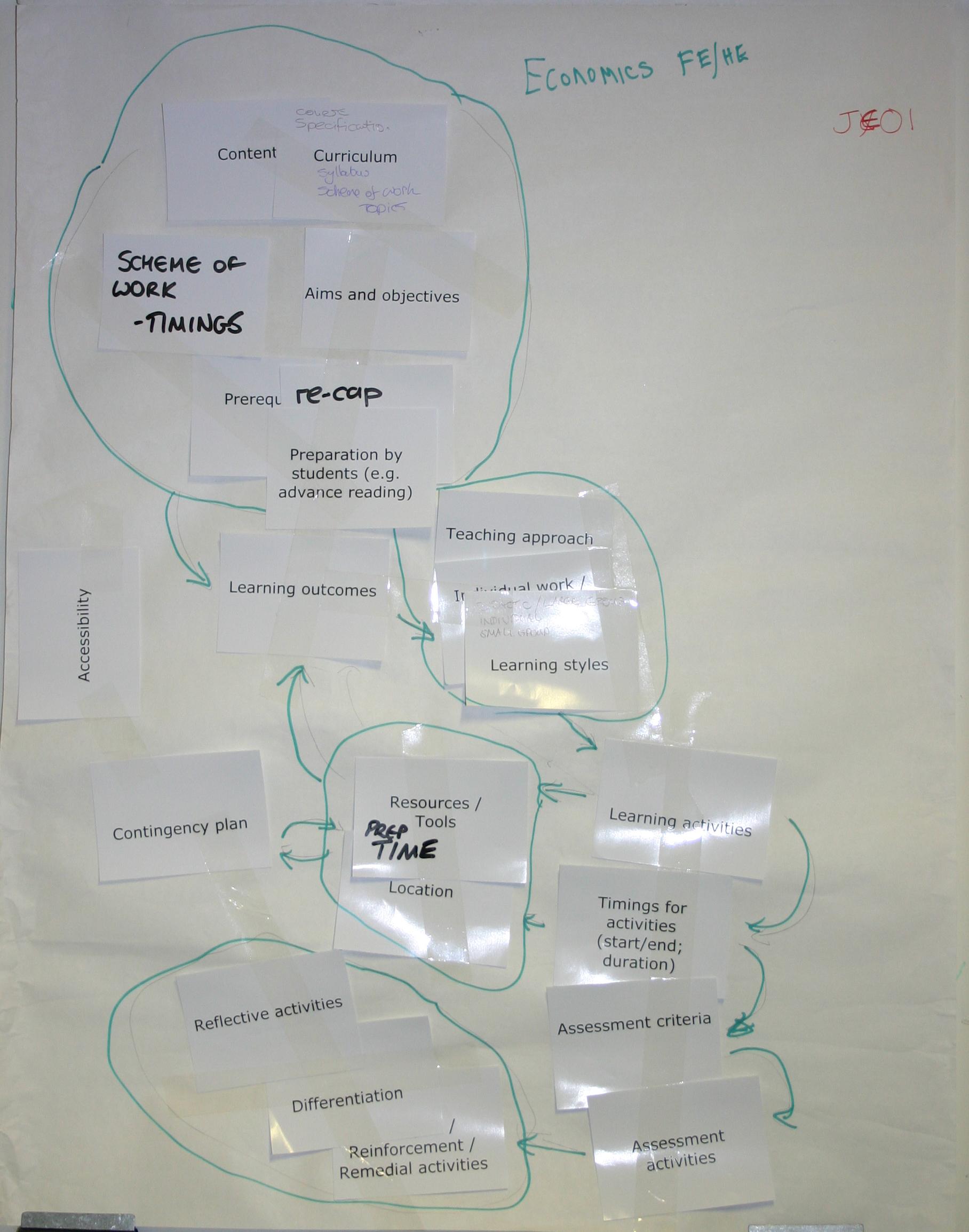

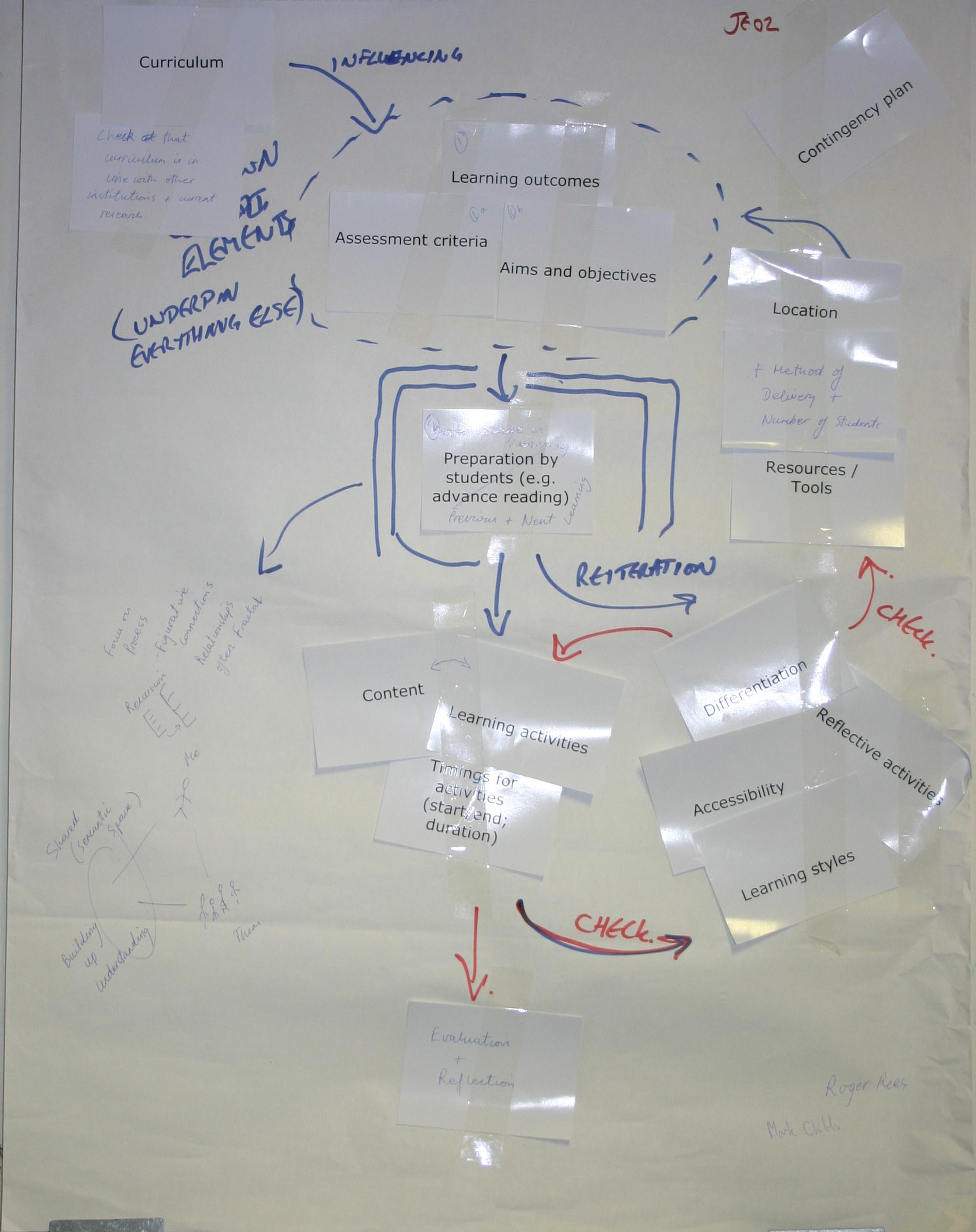

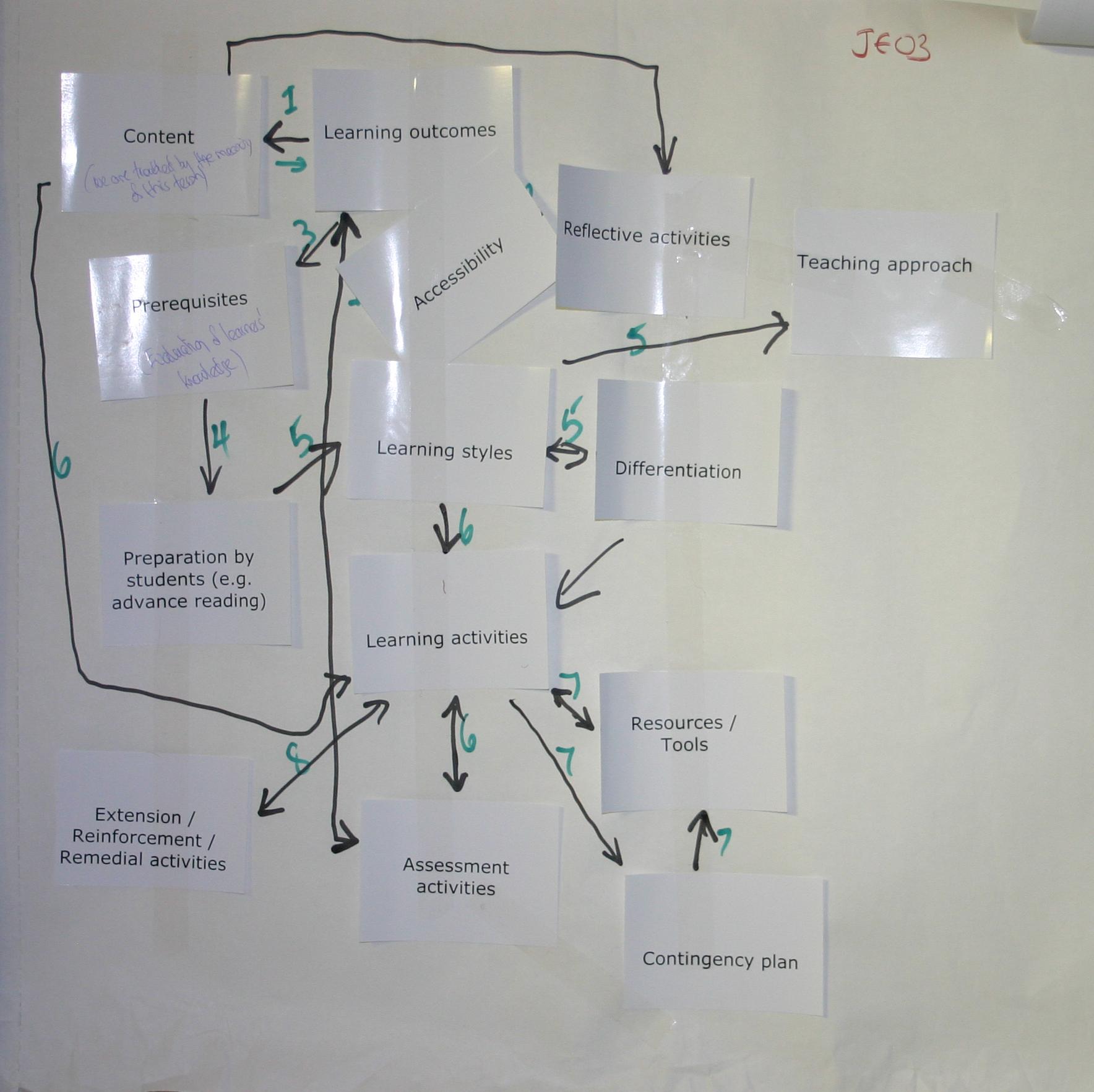

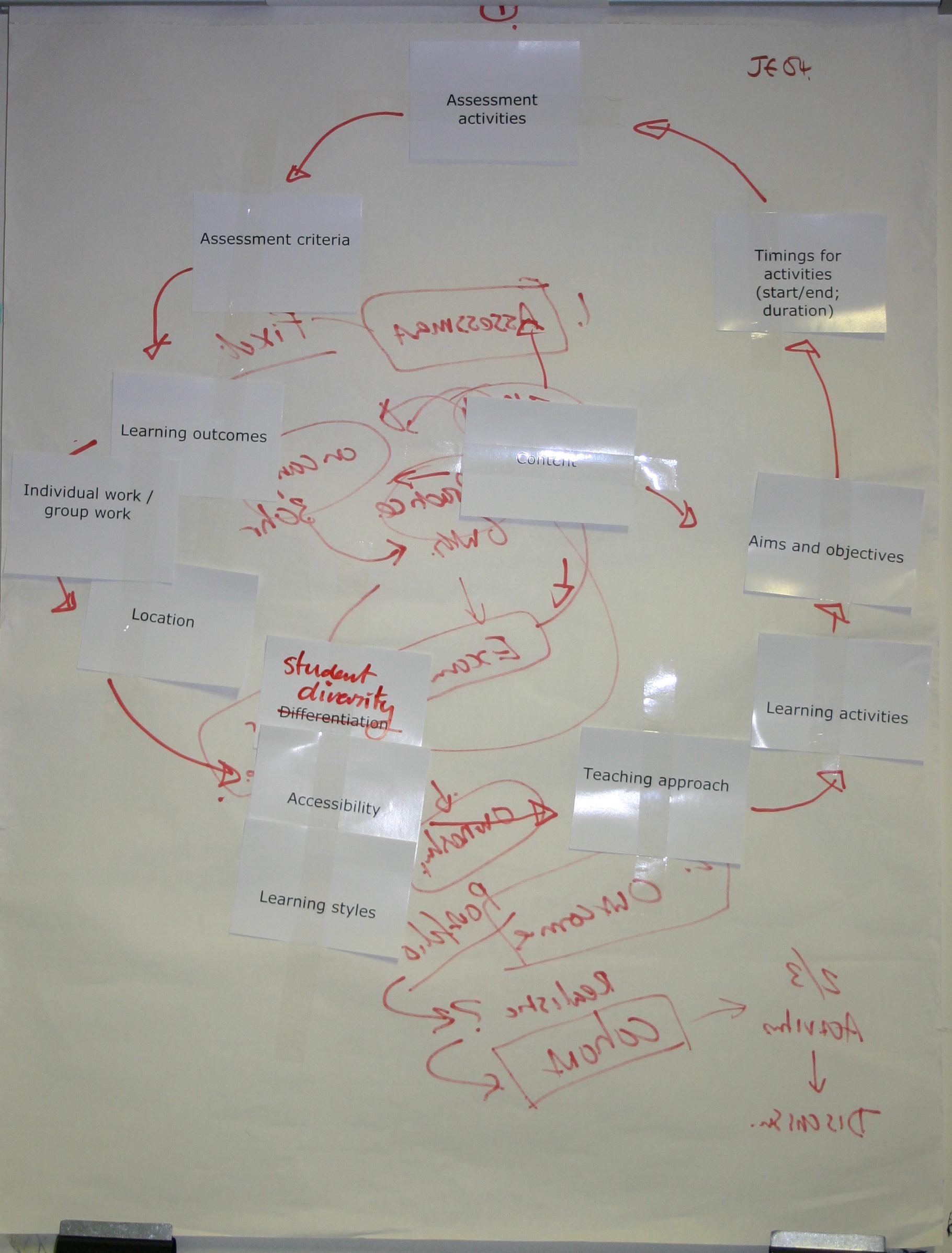

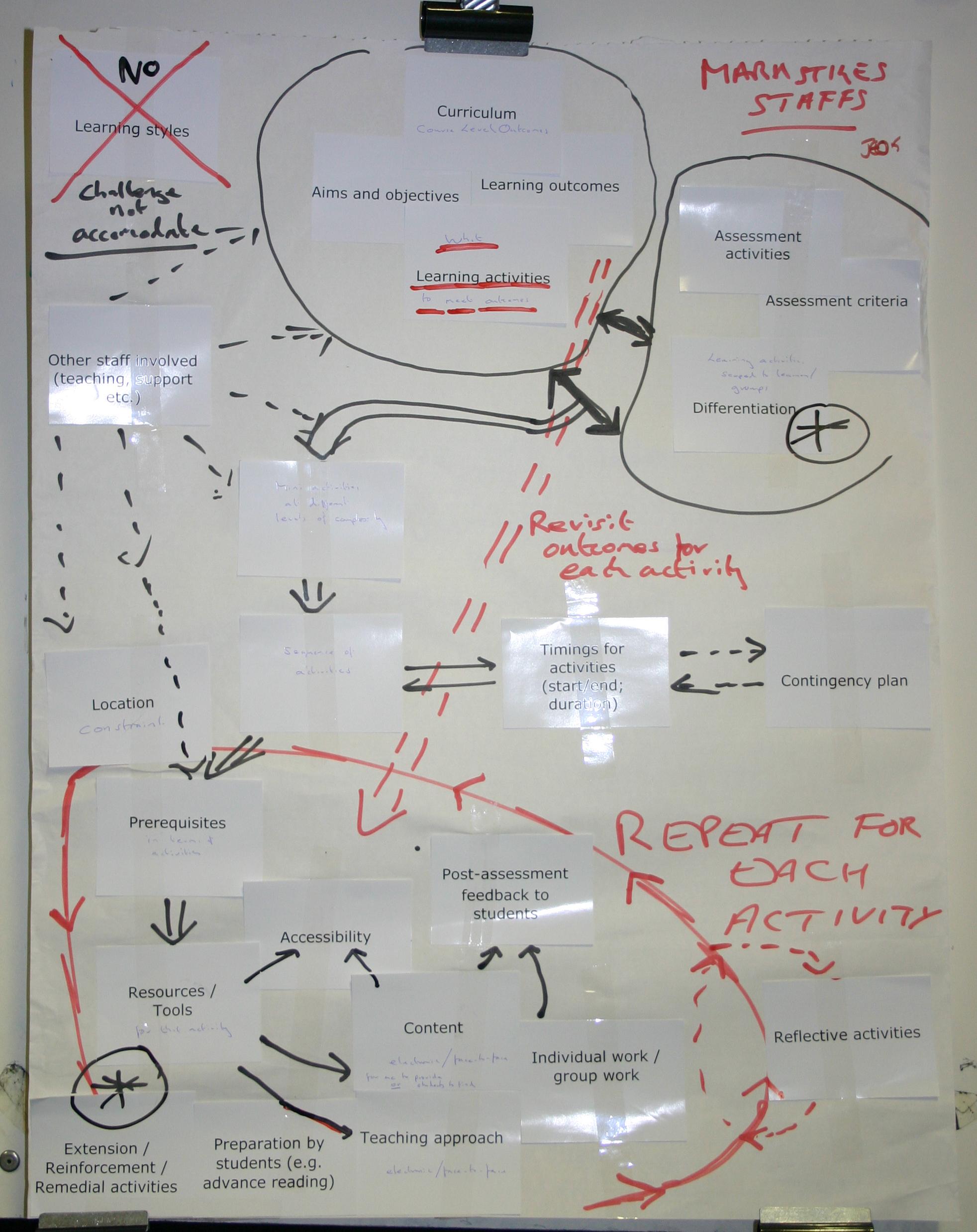

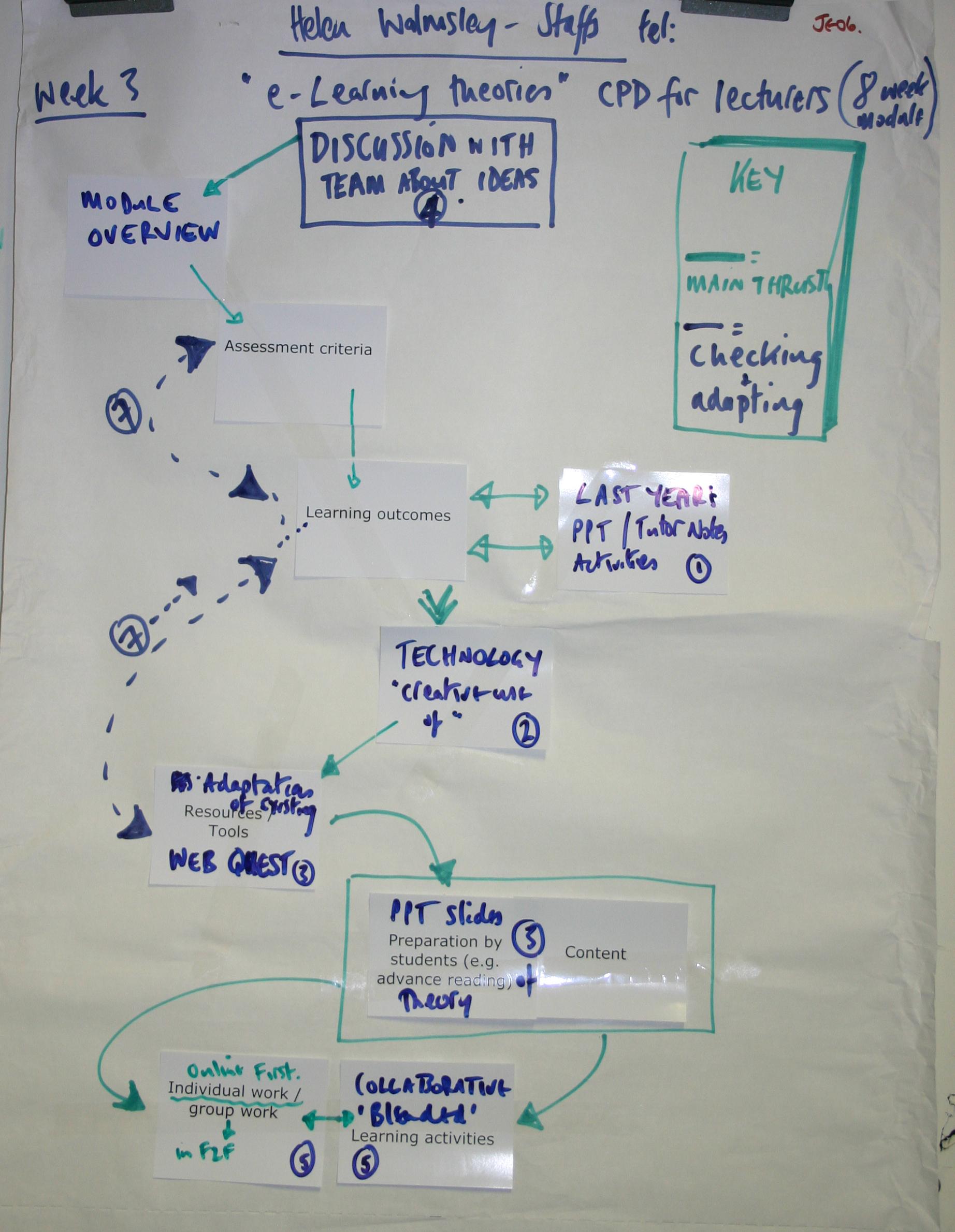

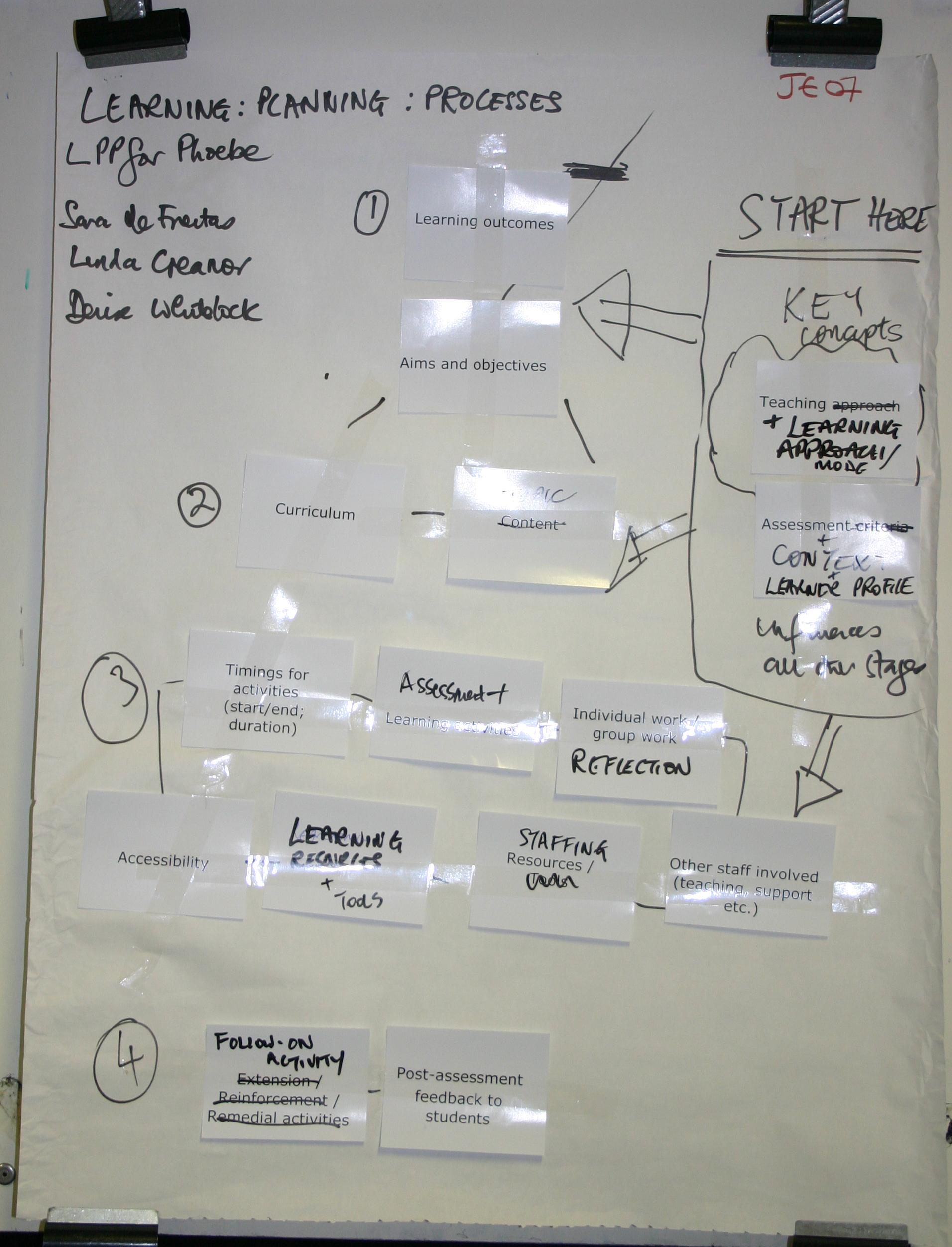

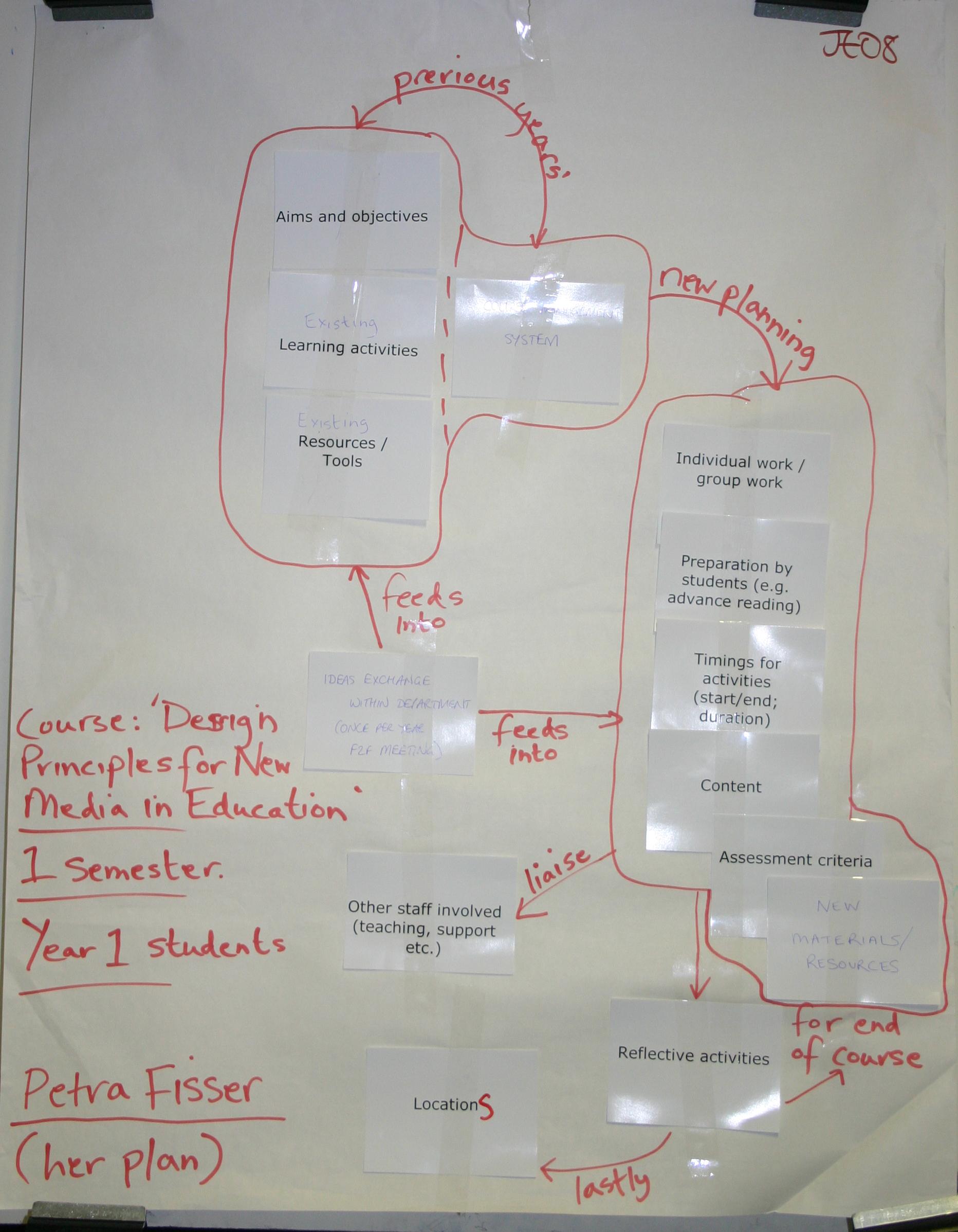

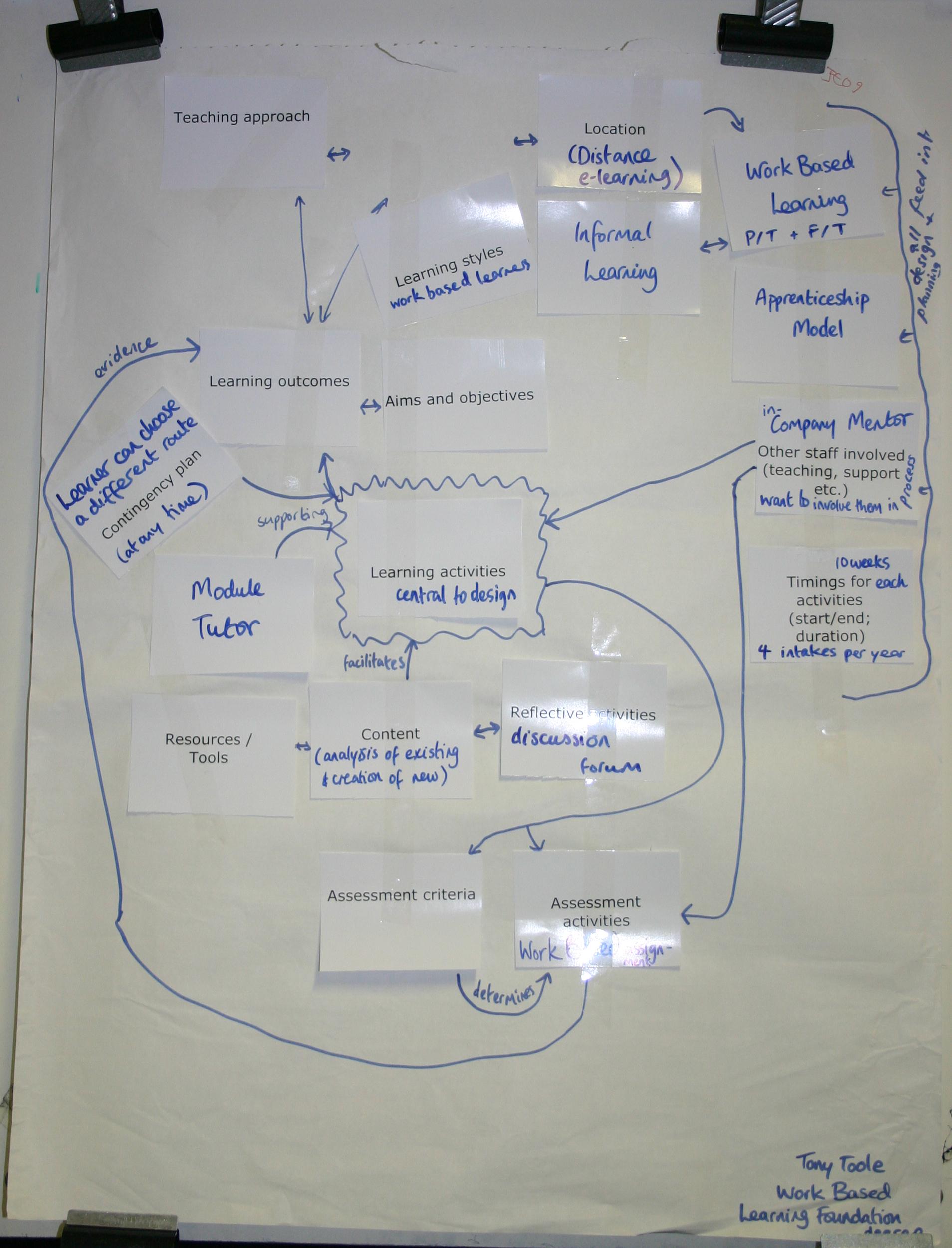

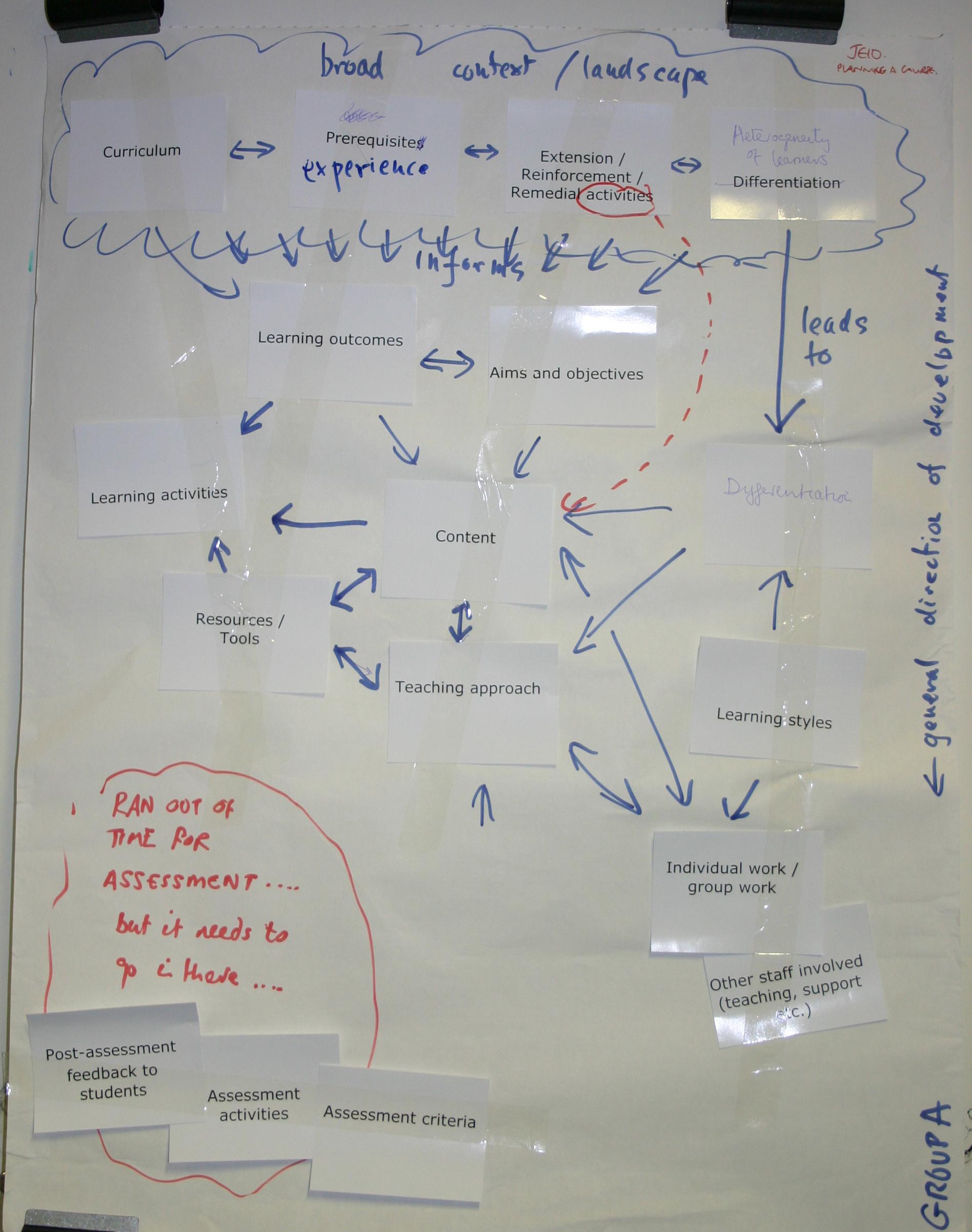

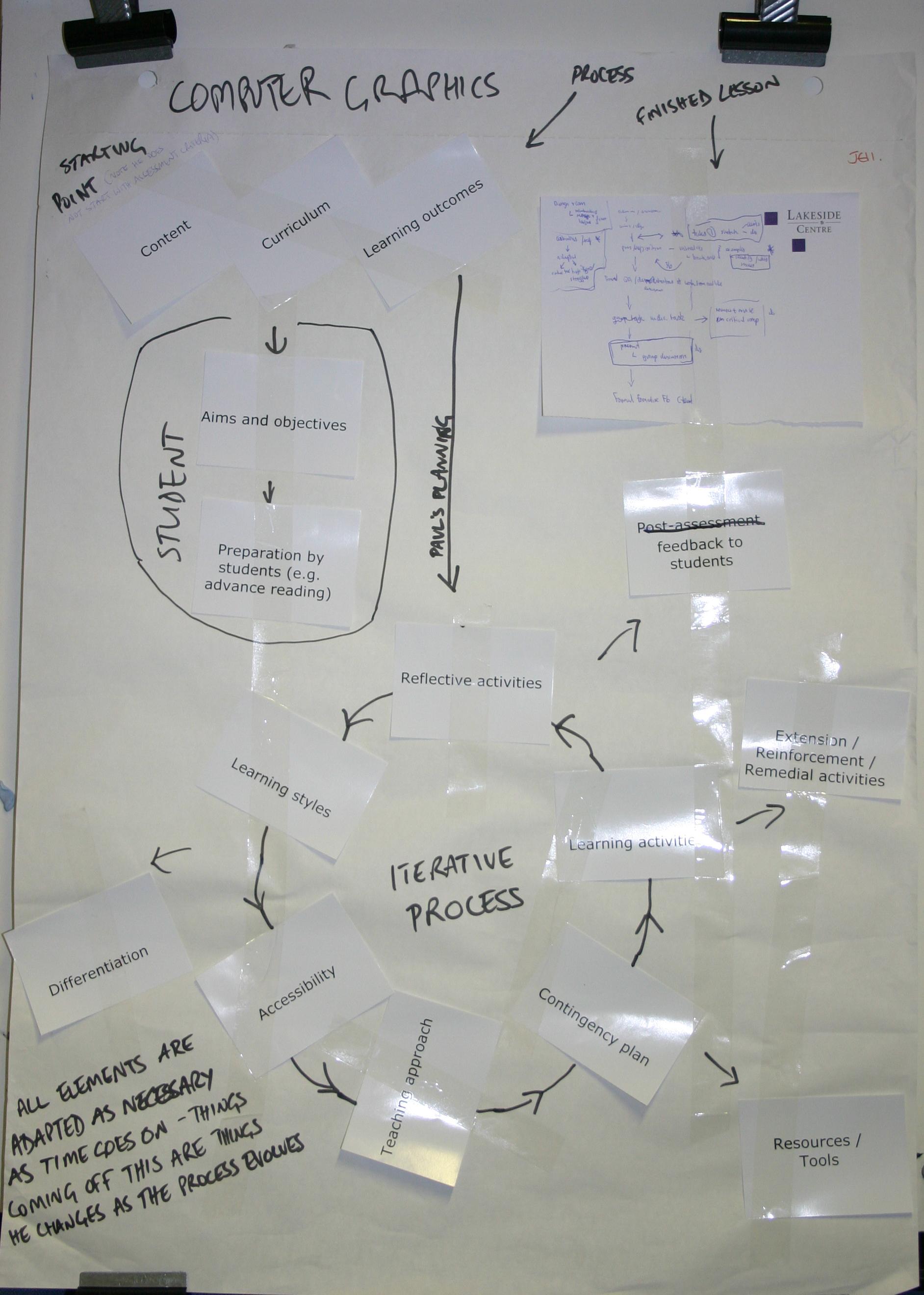

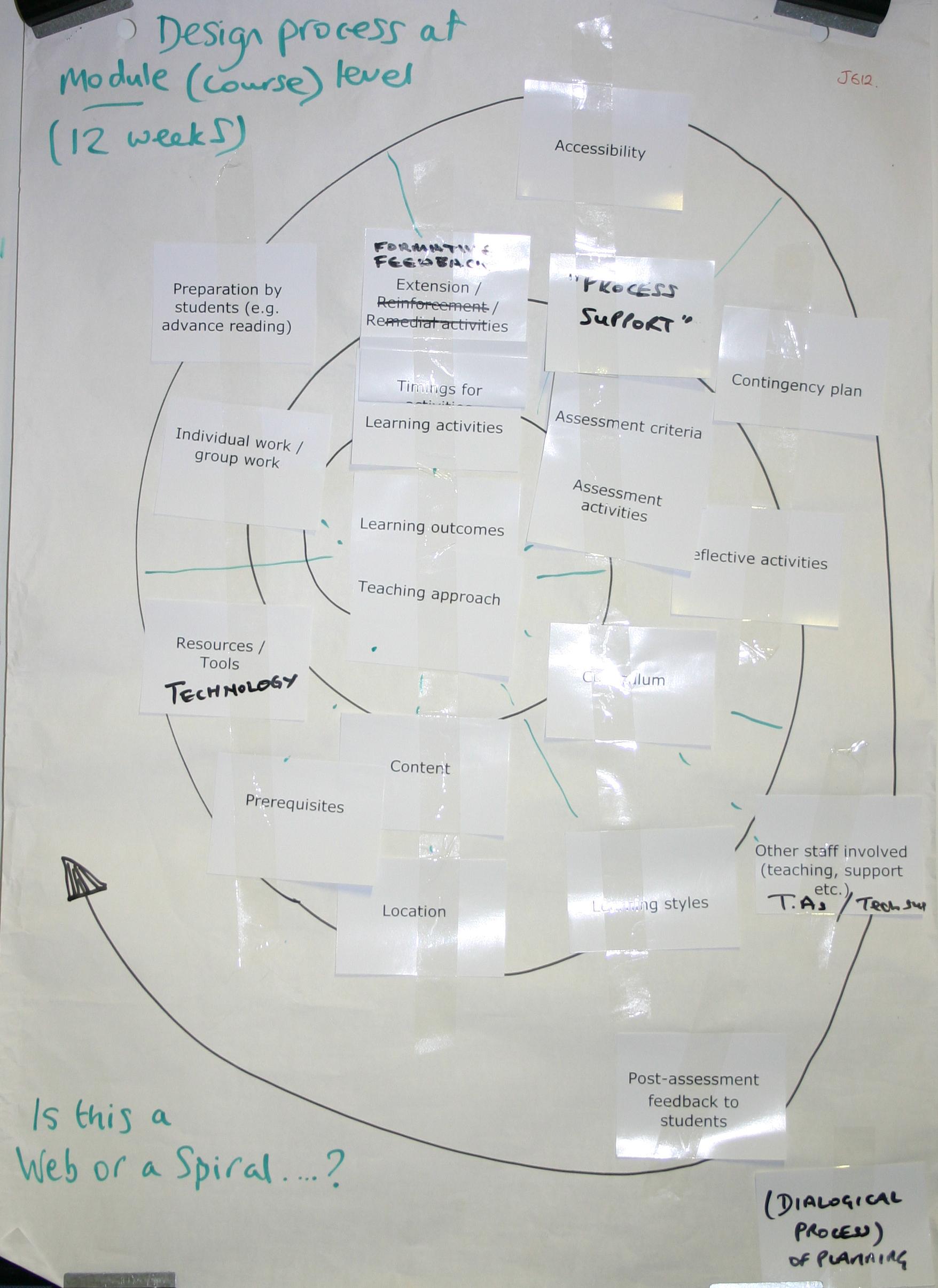

Photographs of participants' diagrams are included at the end of this report.

Visually, the diagrams were extremely varied. Although they all had a starting point (one or several related components) and an end-point, very few had a clearly linear structure (an exception is JE06). Some had very clear iterative cycles (JE11), while others were driven by a strong central focus, such as learning activities (JE03) or content (JE10). In at least one (JE07), planning was underpinned by a core cluster of components. The most unusual structure was a spiral. Or was it a web? The planner (JE12) couldn’t decide!

Rather than attempt to categorise diagrams according to their abstract structure, it is perhaps more instructive to consider the language in which the planners described the flow of their task and the relationships among components of the lesson plan. These were captured in responses to the structured questions and, in some cases, recorded as annotations on the diagrams themselves. We have grouped the relevant utterances and annotations into five categories:

| Category | Terms |
|---|---|
| Directional (flow) | Starting-point; main thrust; feeds into (2); leads to; general direction of development; lastly; evolves. “If you start with content [a] more iterative approach [is] needed. Pedagogic model and context for learners are the main drivers for planning” (JE07). “Many issues in practice are considered together not sequentially e.g. assessment, activities, timings, content & tools” (JE08). |
| Iterative | Reiteration; check (2); revisit; repeat; iterative process |
| Qualitative | Underpin; influence (2); supporting; facilitates; determines; evidence; informs. “The known elements (curriculum, learning outcomes, aims & objectives and assessment criteria) underpin everything else” (JE02). “Balance of constraints is the pragmatic guiding course design” (JE07). |
| Communicative/collaborative | Liaise; dialogic process |
| Imagistic | Broad context; landscape; web; spiral |

These categories not only reinforce the non-linearity of the planning process but also suggest that relationships among components have a semantic richness which may be individual to each practitioner: one person’s “leads to” may be another person’s “informs” (i.e. a relationship that is more than sequential and implies some sort of enrichment of understanding). In terms of designing a pedagogic planner tool, therefore, they further counsel against a prescriptive approach.

Associations among components of a plan

“Association” refers both to the consideration of thematically related components (e.g. assessment criteria and the design of assessment activities) and to components which are in a relationship of dependency or influence (e.g. learning outcomes and activities; location and tools). In designing Phoebe, we have grouped what we consider to be thematically related components under the same main heading (e.g. “curriculum & learning outcomes”) and used hyperlinks in the guidance for each component to indicate where it might be expedient to consider another component when making planning decisions for the current one. We therefore needed to determine the extent to which the associations that we have identified match those made by practitioners “in the wild.”

Disentangling the associations on participants’ diagrams was, as expected, an intricate process, and we do not claim to have captured every one. Associations seemed to be identifiable either from the spatial organisation of cue cards (e.g. clusters of thematically related cards) or from notation such as circles (for thematic associations) or bi-directional arrows (for relationships of dependency or influence).

Nevertheless, we identified from the diagrams a possible need for closer associations within Phoebe between the following pairs of components:

- Learning styles + Teaching approach

- Learning activities + Accessibility

- Learning activities + Aims & objectives

- Learning activities + Content (perhaps through models of learning, tools?)

- Learning activities + Learning styles

- Learning activities + Location

- Learning activities + Other staff involved

- Learning activities + Teaching approach (perhaps through models of learning?)

Implication: since there is a practical (and pragmatic) limit to the number of cross-references (links) that we can maintain, we intend to explore the usefulness of tagging as a means for users to create their own meaningful connections within Phoebe.
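The limit on links is easy to quantify: with the 22 components on the cue cards there are 22 × 21 / 2 = 231 possible pairs, each of which could in principle need a hand-maintained cross-reference. Tagging sidesteps this because associations emerge from whatever labels users attach. The Python sketch below illustrates the idea only; the data model (a mapping from component name to a set of user-chosen tags) and the function names `pairwise_link_count` and `associations_from_tags` are hypothetical and are not taken from Phoebe, which is built on wiki technology.

```python
from __future__ import annotations

from collections import defaultdict
from itertools import combinations


def pairwise_link_count(n_components: int) -> int:
    """Number of distinct undirected pairs among n components,
    i.e. the upper bound on hand-maintained cross-references."""
    return n_components * (n_components - 1) // 2


def associations_from_tags(tags: dict[str, set[str]]) -> set[frozenset[str]]:
    """Derive component pairs that share at least one user-assigned tag.

    `tags` maps a component name to the tags a user attached to it
    (hypothetical data model, for illustration only).
    """
    components_by_tag: dict[str, set[str]] = defaultdict(set)
    for component, component_tags in tags.items():
        for tag in component_tags:
            components_by_tag[tag].add(component)

    pairs: set[frozenset[str]] = set()
    for members in components_by_tag.values():
        # Any two components carrying the same tag are treated as associated.
        for a, b in combinations(sorted(members), 2):
            pairs.add(frozenset((a, b)))
    return pairs


if __name__ == "__main__":
    print(pairwise_link_count(22))  # 231 possible pairs among the 22 cue-card components

    # Hypothetical tags a single user might attach while planning a session.
    user_tags = {
        "Learning activities": {"fieldwork", "lab-support"},
        "Location": {"fieldwork"},
        "Accessibility": {"fieldwork"},
        "Other staff involved": {"lab-support"},
    }
    for pair in sorted(associations_from_tags(user_tags), key=sorted):
        print(sorted(pair))
```

In this example one "fieldwork" tag yields three associations at once (including Learning activities + Location and Learning activities + Accessibility, two of the pairs listed above), without anyone curating those pairs individually.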

Responses to structured questions

Below is a summary of the responses to the structured questions, together with notes on their implications for the design of Phoebe. Although quotations from the responses are included in the reporting of results, we should bear in mind that these may be paraphrases or summaries of the planner's original utterance written down by another party, and thus may not reflect the speaker's actual words. Nevertheless, we will take them at face value.

- Does the process include looking at, or using, an existing plan while creating this one?

Seven practitioners responded in the affirmative, of whom four explicitly stated that they would look at the previous year’s plan for this session (“and learning from what went wrong”). For one of these people, the task was facilitated by the existence of a CMS. The pre-existing plan did not necessarily have to exist as an externalised representation: one person noted that he planned “more using a generic approach based on experience,” while another referred to consulting “an internalised plan.” However, in answer to the next question, this last person admitted that he might look at his own previous designs, designs produced by other institutions, or even theories, in order to verify his own.

Implications: Transferring existing plans to a Phoebe format could be an issue (but not for the initial prototype).

- Does the process include searching for examples or case studies of the creative use of technology by other people?

Seven affirmative responses were received that directly addressed this question. There was no consensus on sites consulted, and only one person referred to looking at colleagues’ material; all the others cited academic or popular Web-based resources such as “Shiraz” [sic: this may be the Shiraz University of Medical Sciences], JORUM, Indiana University’s plagiarism site, the BBC, the Guardian and Google.

Implications: Phoebe is expected to include examples of creative learning designs, together with links to appropriate repositories; however, comprehensiveness of coverage and relevance are continuing questions (see data from PI interviews).

- At what point in the planning process are support materials (e.g. handouts, reading lists) created?

Responses appeared evenly split among the three suggested options: at the beginning, while planning each activity, and at the end.

Implications: Invite teachers to insert links to materials stored online or in shared workspaces.

- Does the plan include allowances for unforeseen situations such as student-initiated digressions or technical problems?

Almost all practitioners appeared to plan for contingencies at some level, even if it only amounted to “thinking through alternatives at the back of [my] mind.”

Implications: None. Contingency planning is already supported at both the “global” and activity levels, and this question was merely to check that practitioners do it at some stage.

- Does the plan have to be submitted to others for approval (e.g. to head of department, mentor)?

Six practitioners had to submit either the plan for an individual session or the overall course/module plan for approval. One person simply had to co-ordinate his teaching sessions on Master’s-level courses with those taught by his colleagues in order to ensure that the students had “a well integrated experience.”

Implications: Include reminder in Phoebe.

- Looping back (iterations). Are there any points in the planning process where it might be necessary to go back and revisit earlier stages in the planning process?

Eleven affirmative responses were obtained, including the observation “Whole process iterative. Not appropriate to linear plan.”

Implications: None. This merely reinforces one of our fundamental understandings of the planning process.

- Do any aspects of planning run throughout the process: i.e. alongside the others?

Aspects that can run throughout the process include reference to the scheme of work, aims, learning outcomes, assessment criteria and dialogue with others (and probably a number of others which were recorded directly onto the diagrams).

Implications: Unclear whether this process is an internal/mental one, or whether users would want to be able to refer back to and/or refine the component in question throughout.

- Did you develop this plan in collaboration with others?

About half of the practitioners engage in some form of collaboration, ranging from discussion with colleagues to a complex negotiation with 15 other institutions to design 24 modules.

Implications: Phoebe is explicitly designed as a social tool; explore its usefulness in supporting collaboration as part of the evaluation.

- If you plan learning sessions in collaboration with others, what tools do you use to share the draft versions, comment on them and generally communicate with the other members of the team?

Few participants answered this question, but those who did indicated a continuing, substantial reliance on face-to-face (F2F) meetings and pencil and paper. Word and email are the principal e-tools used.

Implications: Interesting that no-one appeared to use a wiki! Use of Phoebe as a collaborative planning tool should form part of the evaluation.

- What tools are used to produce the final plan?

Mostly Word, although mind-mapping and pen-and-paper also feature, as does structuring the learning session directly into the VLE or CMS. In one case, there was “no final lesson plan because it was embedded in the activity tasks given to students.”

Implication: Some form of semi-structured output from Phoebe into Word is a must; equally, we need to recognise that not all people will want to produce a plan within Phoebe.

- Please indicate where your finished plan would lie on a scale of 1 to 7, where 1 = rough notes (“back of an envelope”) and 7 = formal structured plan laid out in a format specified by your college/university.

Eleven responses were received, grouped as follows:

| Rating | No. of respondents |
|---|---|
| 7 | 2 |
| 6 | 2 |
| 5 | 5 |
| 4 | 1 |
| 3 | 1 |

These ratings are slightly lower than those obtained from the larger sample in the LD Tools project (perhaps unsurprisingly, since in LD Tools FE practitioners tended to produce more formal plans than those in HE, who predominate here), but they still lie towards the "formal" end of the continuum.
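As a rough check on the last point, the eleven ratings can be summarised directly. This is simple arithmetic on the counts in the table above, not a figure from the original analysis, and it says nothing about the LD Tools sample:

$$
\bar{r} = \frac{2 \times 7 + 2 \times 6 + 5 \times 5 + 1 \times 4 + 1 \times 3}{11} = \frac{58}{11} \approx 5.3
$$

The median (the sixth of the eleven ordered ratings) is 5, and nine of the eleven respondents rated their plans at 5 or above, against a scale midpoint of 4.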

Implications: As for the previous question.

Thought-provoking comments

Some contributions captured in the structured questions and in Jane Plenderleith's summary of the session particularly caught our eye during the analysis. We reproduce them here, together with our responses:

- "The words (blocks) given out at the start focus on what teacher does (lesson planning) not what learner does (design for learning)." In a sense, that isn't surprising since, even though D4L is about activity-centred active learning, those learning experiences have to be planned for by the teacher – at least, when they take place within a formal educational setting. BUT the distinction between "lesson planning" and "design for learning" is a crucial one to make, and one which the D4L community hasn't yet fully articulated. We had an inconclusive discussion at the Experts' meeting back in June, in which possible distinguishing factors were aired, e.g. "design" implies greater creativity/artistry, or LD/D4L is specifically about the creative use of technology (which might falsely imply a superiority over non-technologically mediated learning experiences...?). In defence of the continued use of "plan" in relation to learning experiences, we would also point to evidence from previous projects – the LAMS trial and eLISA – from practitioners themselves, stating that introducing (new) technology into teaching and learning has forced them to plan in ways that they hadn't done for years. Here's the testimony of a teacher from eLISA:

“[LAMS] fundamentally made me think about what I actually do in the class […] when I first started the sequence I started to really think […] about how am I going to project what it is that I give to a lesson when I’m face to face on this screen, yeah? […] What it brought me back to was the actual lesson plan, you know, like when you first started off […] it was like that all over again.”

And finally, if planning isn't relevant in D4L, then why has JISC commissioned two pedagogic planner tools?

- "If something is constructivist in approach it's more difficult to capture in a plan, especially if it's to be shared mainly electronically or out of context."

Hmmm... others have successfully planned constructivist learning experiences. Perhaps it depends on one's interpretation of "constructivist." Constructionist may be a different matter, however... See http://www.papert.org/articles/SituatingConstructionism.html.

- "There is no final lesson plan because it was embedded in the activity tasks given to students. […] The plan 'became' the activity but the overall plan was broken down in other documents and processes."

This is an example of what Mira Vogel and Liz have called "dispersed" learning designs. Although it's perfectly valid for the practitioner's own purposes, it can cause problems if other people want (or need) to make sense of it for any reason. This is another argument in favour of producing explicit, if not actually formalised, learning designs: namely, reusability.

- "Much planning is implicit and not necessary to articulate it. The act of breaking it down and itemising considerations is only ever done for activities like these!"

We'd like to append the following: "or when introducing a new dimension into the learning experience." See the quotation from our eLISA teacher! But this statement, together with the preceding one, raises another challenge to capturing learning experiences as (reusable) designs: the completeness with which we can represent them.

- "My planning is sometimes content, explaining a process […]. That doesn’t fit the cards."

And that's perfectly valid as far as we're concerned. Phoebe isn't going to suit everyone; as a participant in the LD Tools project sagely observed back in the summer of 2005:

“Maybe it’s going to be difficult to develop a single software tool kit that suits everybody’s preferences for planning learning (paper based, software or a mixture of both!) and maybe it could be useful to develop flexible software tools that support teachers through the “process” and stages of designing for learning…”

Concluding notes

We haven't written a lengthy discussion for this report, as we have simply noted the implications of each result for the future trajectory of the Phoebe project as we have gone along. However, overall we feel that the activity has underlined some of our original decisions (although that isn't a cause for complacency!), challenged others and indicated some gaps to be plugged. We will come back to this report as we work on the evaluation version of Phoebe over the winter, to review the implications raised by the data collected and decide how to implement them in the tool.

Finally, many thanks to the 40+ experts who took part in the activity!